Data centers are important structures that hold vital information for businesses, schools, public agencies, and private individuals. Electrical, power, and lighting systems play a key role in the design of these data centers.

Respondents

Tim Chadwick, PE, LEED AP, President, AlfaTech Consulting Engineers, San Jose, Calif.

Robert C. Eichelman, PE, LEED AP, ATD, DCEP, Technical Director, EYP Architecture & Engineering, Albany, N.Y.

Barton Hogge, PE, ATD, LEED AP, Principal, Affiliated Engineers Inc., Chapel Hill, N.C.

Bill Kosik, PE, CEM, LEED AP, BEMP, Building Energy Technologist, Chicago

Keith Lane, PE, RCDD, NTS, RTPM, LC, LEED AP BD+C, President/Chief Engineer, Lane Coburn & Associates LLC, Seattle

Robert Sty, PE, SCPM, LEED AP, Principal, Technologies Studio Leader, SmithGroupJJR, Phoenix

Debra Vieira, PE, ATD, LEED AP, Senior Electrical Engineer, CH2M, Portland, Ore.

CSE: What PUE goals have clients asked you to achieve in difficult situations? Describe the project.

Chadwick: Our typical data centers are being designed at a peak PUE of 1.3 (to size infrastructure). However, we are seeing annualized PUE performance in the range of 1.07 to 1.20. These low PUEs have required unique design solutions that rely on new technologies or on cooling and power strategies not previously applied at the scale of the proposed projects. We have been successful at achieving documented, operating PUEs as low as 1.06 to 1.09 at various Facebook data center sites.

Hogge: In our experience, the PUE metric itself isn’t the challenge as much as providing education to understand the difference between a meaningful PUE result and a short-term view. We’ve been asked for a low maximum snapshot partial PUE rather than an annualized number. We’ve been challenged to deliver a low PUE with a very low IT load on Day 1, even though equipment has been deployed for final build-out. Topologies that right-size and plan for growth in a modular fashion help with this challenge, but an honest conversation about the "why" and the "how" of setting up and analyzing PUE metrics usually leads to an informed client who embraces the best parts of this initiative.

Lane: It is important to clarify the difference between maximum and average PUE. The maximum PUE reflects the worst-case design day and failure mode, including UPS battery recharge, and it dictates the size of the electrical equipment so that the electrical system is never overloaded. The average PUE is a better indicator of the running efficiency and running costs of a data center, but both maximum and average PUE must be considered during the design and implementation of a data center. We have been involved in data centers that have incorporated many electrical and mechanical efficiencies (efficient UPS and transformers, 230 V server distribution, hot-aisle containment, warmer cold aisles, increased delta T, and outside-air economizers) that are seeing peak PUEs of less than 1.3 and average PUEs of less than 1.2. Be wary of false PUE claims that don’t take all losses into consideration. Also, be sure to understand both maximum and average PUE, as both are critical to understanding the efficiency and the requirements of the electrical distribution system.
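
To illustrate the distinction between maximum and average PUE, here is a minimal Python sketch. It assumes equally spaced power readings, and the sample values and function names are hypothetical, not measurements from any project discussed here. Peak PUE is taken as the worst single reading, while the average is weighted by energy (total facility energy divided by IT energy) rather than being a simple mean of the ratios.

```python
def pue(total_kw, it_kw):
    """PUE = total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_kw / it_kw


def peak_and_average_pue(samples):
    """samples: (total_facility_kw, it_kw) pairs taken at equal intervals.

    Returns (peak, average). Peak is the worst single reading; average is
    energy-weighted, i.e. summed facility power over summed IT power.
    """
    peak = max(pue(total, it) for total, it in samples)
    facility_sum = sum(total for total, _ in samples)
    it_sum = sum(it for _, it in samples)
    return peak, facility_sum / it_sum


# Hypothetical readings (kW); the third stands in for a hot design day
# with UPS battery recharge under way.
samples = [(1150.0, 1000.0), (1100.0, 1000.0), (1280.0, 1000.0), (1120.0, 1000.0)]
peak, average = peak_and_average_pue(samples)
print(f"peak PUE = {peak:.2f}, average PUE = {average:.2f}")
```

With these made-up readings the peak works out to 1.28 and the energy-weighted average to about 1.16: the first figure sizes the electrical equipment, the second tracks running cost.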

Eichelman: As energy efficiency requirements continue to become more stringent, maximum PUE values are being reduced. Federal government facilities are now required to have PUEs of no more than 1.5 for existing facilities and between 1.2 and 1.4 for new facilities, which is fairly consistent with the requirements for most data center owners. To achieve these requirements, very efficient electrical and HVAC equipment and distribution are required. Typical approaches include use of high-efficiency UPS systems and transformers, water- or air-side economizers, high-temperature chilled-water systems, rack- or aisle-level containment systems, direct water-cooled IT equipment, and containerized data center solutions.

One of the main challenges in meeting an aggressive PUE requirement relates to the ramp-up of the IT deployment. As a data center module comes online, the PUE may be very high, as there is often little equipment initially installed on the floor while the corresponding infrastructure is fully built out. As equipment is added, the PUE continues to fall, with the lowest PUE being achieved at full build-out. To reduce these inefficiencies, a modular infrastructure is needed. This infrastructure should be rapidly deployable and planned to match the next IT installation as closely as possible.
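
The ramp-up effect can be sketched with a deliberately simple model. The assumptions here are illustrative only: overhead is split into a fixed part that scales with installed infrastructure capacity and a variable part that scales with IT load, and the 10% fractions, module size, and function names are hypothetical rather than taken from any respondent's project.

```python
import math


def pue_at_load(it_kw, installed_kw, fixed_frac=0.10, variable_frac=0.10):
    """Toy PUE model (assumed, not measured).

    fixed_frac: overhead that exists whenever capacity is installed
                (e.g. no-load transformer and UPS losses), per installed kW.
    variable_frac: overhead that grows with the IT load actually served.
    """
    overhead_kw = fixed_frac * installed_kw + variable_frac * it_kw
    return (it_kw + overhead_kw) / it_kw


def modular_installed_kw(it_kw, module_kw):
    """Capacity in place when modules are added just ahead of the IT load."""
    return math.ceil(it_kw / module_kw) * module_kw


full_build_kw = 4000.0  # hypothetical ultimate IT capacity
module_kw = 1000.0      # hypothetical module size

for it_kw in (400.0, 1000.0, 2000.0, 4000.0):
    day_one = pue_at_load(it_kw, full_build_kw)
    modular = pue_at_load(it_kw, modular_installed_kw(it_kw, module_kw))
    print(f"IT {it_kw:6.0f} kW: full build-out {day_one:.2f}, modular {modular:.2f}")
```

Under these assumptions, a 400 kW initial load on a fully built 4,000 kW infrastructure gives a PUE of 2.10, versus 1.35 when only one 1,000 kW module is installed; both approaches converge to 1.20 at full build-out.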

Sty: The National Renewable Energy Laboratory (NREL) Energy Systems Integration Facility (ESIF) established a PUE goal of 1.06 for all of the design-build teams pursuing the project. In addition, NREL had established requirements to use the waste heat from the high-performance computing data center to provide heating in other areas of the building. The PUE goal was achieved, in part, by the use of direct water-cooled IT cabinets that accept entering water temperatures of 75°F and produce leaving water temperatures close to 100°F. This allowed the use of direct evaporative water-side economizer cooling and eliminated the need for mechanical refrigeration. The return water is used for preheating laboratory make-up air heating coils and for radiant heating in the offices, which further reduced the load on the cooling towers. The NREL ESIF achieved LEED Platinum using these strategies.

CSE: Describe a recent electrical/power system challenge you encountered when working on a data center project.

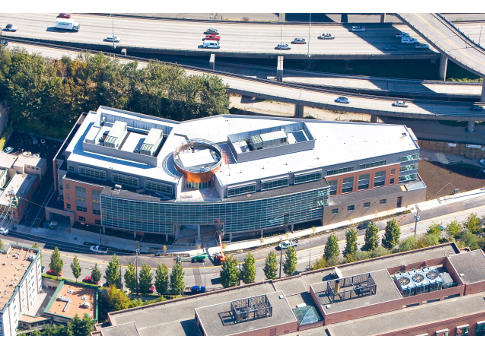

Vieira: A high-density data center in the Pacific Northwest had an incredibly small footprint and was designed to be scalable, with multiple electrical services. The distribution substations and the generators were located outdoors. There were multiple 1,600-amp concrete-encased duct banks, which were increased in size due to the client’s request to use aluminum conductors. These duct banks were further increased in size due to heating associated with the burial depths and proximity to other duct banks. The design team struggled to physically fit all of the duct banks within the data center footprint. Extensive coordination with the electrical contractor, engineer, and equipment vendors was required.

Hogge: We recently designed a data center where the electric utility was not experienced with this sort of customer. The design team worked closely with the utility to alter its policy so that the service switchgear and backup power distribution system could transfer flexibly and seamlessly. This highlighted the importance of involving the utility company during the concept design stage and clearly articulating how you intend to operate the power distribution system, sometimes detailing the design downstream of a service transformer.

CSE: How have cabling and wiring systems changed within data centers?

Eichelman: Traditionally, IT equipment has been powered from single-phase 120- or 208-vac, 20- or 30-amp branch circuits. These circuits would run from receptacles located under the computer room’s raised-access floor back to a remote power panel (RPP), also located in the computer room. As data centers continue to evolve to address growing IT equipment power densities and increased requirements for reduced PUE, power distribution has responded as well. 208-vac, 3-phase, 60-amp feeds to in-rack PDUs are common in medium- to high-density environments. In some cases, 415/240-vac, 3-phase feeders to the in-rack PDUs are also being used to reduce the quantity and/or rating of the branch circuits and corresponding connectors and PDUs that a particular cabinet may require. High-performance computing equipment sometimes requires direct 480 V, 3-phase feeds. Overhead distribution from a busway system with plug-in circuit breakers is also common. This approach is particularly useful in facilities with no raised floor or in facilities where IT equipment requires frequent changes or rapid deployment, but careful coordination with overhead cable tray and aisle- or rack-containment systems is necessary. Direct-current (dc) distribution systems have been introduced to increase energy efficiency and reliability, but have not yet gained widespread acceptance in the U.S.
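
The effect of distribution voltage on circuit rating follows from the standard three-phase relationship, apparent power = √3 × line-to-line voltage × current. The short Python sketch below works through it for the 208 V and 415 V feeds mentioned above. The 80% continuous-load factor is an assumption reflecting common U.S. practice, not a figure given by the respondents, and the function names are hypothetical.

```python
import math


def three_phase_kva(v_line_to_line, breaker_amps, continuous_factor=0.8):
    """Usable apparent power (kVA) of a 3-phase circuit.

    continuous_factor=0.8 is an assumed derating for continuous loads.
    """
    return math.sqrt(3) * v_line_to_line * breaker_amps * continuous_factor / 1000.0


def required_amps(kva, v_line_to_line):
    """Line current (A) needed to deliver a given 3-phase kVA."""
    return kva * 1000.0 / (math.sqrt(3) * v_line_to_line)


kva_208 = three_phase_kva(208, 60)  # 208 V, 60 A feed
kva_415 = three_phase_kva(415, 60)  # 415 V feed on the same 60 A rating
amps_at_415 = required_amps(kva_208, 415)  # same cabinet load served at 415 V

print(f"208 V, 60 A feed: {kva_208:.1f} kVA usable")
print(f"415 V, 60 A feed: {kva_415:.1f} kVA usable")
print(f"Same load at 415 V draws {amps_at_415:.1f} A instead of 48.0 A")
```

Under these assumptions a 208 V, 60 A feed delivers roughly 17.3 kVA, while the same rating at 415 V delivers roughly 34.5 kVA, so a cabinet needs about half the current, or half as many circuits, at the higher voltage.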

Lane: There have been a number of data center failures over the past decade caused by overheating of underground electrical duct banks. In many cases, the original engineers either did not provide appropriate Neher-McGrath duct-bank heating calculations or did not properly account for the Rho value of the soil, the burial depth of the conduits, and/or the eventual moisture content of the soil. As a result, we are seeing larger underground electrical feeders, engineered backfill, or the routing of feeders overhead instead of underground to avoid future failures. Understanding the unique soils being used on a specific project, the routing configuration, mutual heating, and the load and load factor, and providing Neher-McGrath calculations early in the design process, are important. Design changes that result from the calculations, and their cost impacts, should be considered before construction.

Chadwick: We have seen power distribution systems that vary from conventional pipe and wire systems to busway and, in some cases, cable bus. As we evaluate the design options, conditions such as field variation and local skilled labor force can impact the design decision. We are typically seeing overhead bus for power distribution in data centers.

CSE: How do you work with the architect, owner, and other project team members to make the electrical/power system both flexible and sustainable at the same time?

Sty: The low-hanging fruit is the installation of high-efficiency transformers and UPS components. The electrical system responds to and supports the loads in the facility, so our team really tries to understand the initial IT design load, plus any future expansion, to right-size mechanical equipment. Proper equipment selection can drive operation at the highest efficiency. HVAC unit fan speeds are controlled through pressure-differential measurements to match the IT server fan draw, which can save large amounts of energy with the reduction in operating horsepower. One example of flexibility in the power distribution is the University of Utah Center for High Performance Computing, where the electrical busway can operate on either 120/208 V or 480 V, depending on the server installation.
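
The fan-speed savings mentioned above can be approximated with the fan affinity laws, under which airflow varies with speed and shaft power varies with the cube of speed. The Python sketch below is idealized: it assumes a fixed system curve and ignores motor and drive efficiency changes, and the function name is hypothetical.

```python
def fan_power_fraction(speed_fraction):
    """Ideal affinity-law estimate: power fraction = (speed fraction) cubed."""
    if not 0.0 <= speed_fraction <= 1.0:
        raise ValueError("speed_fraction must be between 0 and 1")
    return speed_fraction ** 3


for speed in (1.0, 0.9, 0.8, 0.6):
    power = fan_power_fraction(speed)
    print(f"{speed:.0%} speed -> {power:.1%} of full-speed fan power")
```

In this idealized model, slowing the fans to 80% of full speed to match server airflow cuts fan power to about 51% of its full-speed value.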

Hogge: Developing a realistic load profile and growth rate with the owner can be a challenging task, but it is the best approach to sizing electrical equipment with a topology that allows sustainable, modular growth in step with the IT need. This will lead to maximum operating efficiency of the electrical equipment. When working with architects and planners, the engineer must envision the final build-out and work backward to space plan and, just as important, generate a plan for future installation of equipment without major risk or disruption to the facility operation.

Vieira: Quite a bit of effort is spent learning how the owner and architect plan to expand and grow the facility over its lifetime. Each owner defines sustainability differently, so it is important to understand what sustainability means to the owner. The electrical team learns how the owner wants to increase cabinet count and density, whether the owner wants changes in reliability or use of renewable energy, and how the owner intends to staff and operate the facility. Based on these answers, coupled with knowledge of the mechanical and control systems, different electrical topologies can be developed that allow for flexibility, growth, and sustainability. Each topology has pros and cons, which are reviewed with the owner prior to finalizing a design.

Chadwick: We have incorporated modular systems with depressed slabs for electrical skids. We often include expansion areas for increasing the electrical power capacity of the facility to allow for growth or for "future-proofing" the infrastructure. In some cases, the ceiling structure of the data center is overdesigned to allow for adding future busway and supporting it from the grid structure.

Lane: Close coordination with the MEP engineer and architect is important to ensure the owner’s requirements and goals are properly implemented. Coordination of appropriate electrical spaces and pathways with the architect must occur to ensure there is enough space for future expansion of both capacity and redundancy. Close coordination with mechanical engineering is also important to fully understand the initial and full build-out of the mechanical-load requirements, to properly size the electrical system for initial and full build-out. Additionally, the electrical engineer must be fully aware of future mechanical loads and locations, as it may be more cost-effective to get future conduits in place during the initial construction phases of the project. The BIM/Revit process is driving close coordination of the trades and will help ensure a fully integrated project.

CSE: What types of Smart Grid or microgrid capabilities are owners demanding, and how have you served these needs? Are there any issues unique to data center projects?

Hogge: More of our clients are investigating becoming distributed-generation customers, both to assist in a grid emergency and to receive a rebate. The ability to parallel for an extended time and export to the grid can guarantee a power contribution, as opposed to islanding and shedding only what the facility is demanding at that time. This can be an effective way to use redundant generator capacity to assist the community and save on the monthly bill.