Designing solutions for data center clients — whether hyperscale or colocation facilities — requires advanced engineering knowledge

Respondents

- Bill Kosik, PE, CEM, BEMP, senior energy engineer, DNV GL Technical Services, Oak Brook, Ill.

- John Peterson, PE, PMP, CEM, LEED AP BD+C, mission critical leader, DLR Group, Washington, D.C.

- Brian Rener, PE, LEED AP, principal, mission critical leader, SmithGroup, Chicago

- Mike Starr, PE, electrical project engineer, Affiliated Engineers Inc., Madison, Wis.

- Tarek G. Tousson, PE, principal electrical engineer/project manager, Stanley Consultants, Austin, Tex.

- Saahil Tumber, PE, HBDP, LEED AP, technical authority, ESD, Chicago

- John Gregory Williams, PE, CEng, MEng, MIMechE, vice president, Harris, Oakland, Calif.

CSE: Are there any issues unique to designing electrical/power systems for these types of facilities? Please describe.

Tousson: Modeling the electrical system is a critical part of designing electrical/power systems for a supercomputer. It is extremely challenging to perform a transient analysis for a high-density load that, in some instances, varies by 4 megawatts in less than one-quarter cycle. This results in voltage drop and waveform distortion beyond the tolerances of some auxiliary equipment, which can lead to interruption of service and premature failure. Selecting auxiliary equipment that can ride through such extreme conditions is crucial to providing a viable design solution.
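To put the load step Tousson describes in perspective, the short sketch below converts "4 megawatts in less than one-quarter cycle" into a duration and an average ramp rate. It assumes a 60-hertz system, which the discussion does not state, and the function name is purely illustrative. It is not a substitute for a transient study.

```python
def load_ramp_rate_mw_per_s(step_mw: float, cycles: float,
                            frequency_hz: float = 60.0) -> float:
    """Average ramp rate of a load step completed within `cycles` AC cycles.

    frequency_hz defaults to 60 Hz, an assumption for North American systems.
    """
    duration_s = cycles / frequency_hz
    return step_mw / duration_s


# Duration of one-quarter cycle at the assumed 60 Hz, in milliseconds.
quarter_cycle_ms = 0.25 / 60.0 * 1000.0

# Average ramp rate for the 4 MW step quoted in the discussion.
ramp_mw_per_s = load_ramp_rate_mw_per_s(step_mw=4.0, cycles=0.25)

print(f"Quarter cycle: {quarter_cycle_ms:.2f} ms")
print(f"Average ramp rate: {ramp_mw_per_s:.0f} MW/s")
```

At 60 hertz the step completes in roughly 4 milliseconds, which is why the ride-through capability of auxiliary equipment matters so much.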

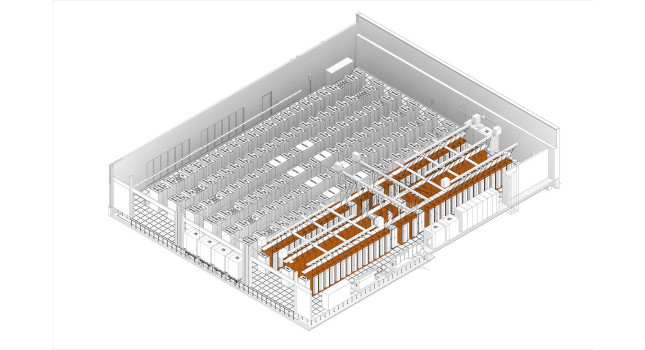

Rener: Power densities are extremely high in most data centers compared to other facilities. In high-performance or supercomputing environments, they are even higher. Getting this much power to the racks is a challenge that often requires a significant number of local power distribution panels and extensive use of busways.

Peterson: Data centers have always been designed with power equipment and distribution systems that are highly reliable. For modern data centers we have answered the call to scale the amount of equipment that is installed, opting for more robust distribution systems that allow for a reduced number of generators and UPS systems to decrease costs.

Starr: Because data centers are high density, operational expense is sometimes a higher priority than the initial capital expense. Data centers use criteria like power usage effectiveness (PUE) to benchmark their efficiency, and the design team is very much involved in assuring the owner that the PUE target will be met. This means specifying energy-efficient equipment, applying ASHRAE 90.4 and making sure instrumentation is included within equipment to aggregate metrics for owner dashboards. Beyond the investment in building and running a data center, there is a cost to maintain it properly, which the owner of an average building figures out only after occupancy.
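For readers less familiar with the PUE benchmark mentioned above, it is the ratio of total facility energy to IT equipment energy, so values closer to 1.0 indicate less overhead. The sketch below is a minimal illustration. The function name and the annual energy figures are hypothetical and are not taken from any project in this discussion.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical annual metered energy, in kWh (illustrative values only).
total_kwh = 13_000_000   # IT load plus cooling, power losses, lighting
it_kwh = 10_000_000      # energy delivered to IT equipment

print(f"PUE = {pue(total_kwh, it_kwh):.2f}")
```

The same calculation is what an owner dashboard would typically aggregate from the equipment-level instrumentation the design team specifies.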

Maintenance and operations must be a fundamental consideration for mission critical facilities in the design process. System topologies such as Uptime Institute Tier I through IV establish levels of concurrent maintainability and fault tolerance. We continuously remind the project team of how costly unplanned outages are. Data center designs are usually phased to allow quick deployment of future phases. Specialty equipment that is found in abundance in these types of facilities includes large UPSs, static transfer switches, power distribution units, rack power strips, quick-connect cabinets and significant energy storage.

CSE: What types of unusual standby, emergency or backup power systems have you specified for data centers? Describe the project.

Peterson: Lithium-ion battery systems have been quickly implemented for data centers, though not without challenges regarding different requirements for access, clearance and safety stipulated by code officials who follow the new NFPA 855 standard. In other locations, clients have requested diesel rotary UPS systems to increase overall efficiency, with fewer components and much less chemical disposal or replacement over the equipment lifetime.

Rener: Most data center backup needs are standby only from a code standpoint, and true emergency power needs are small. We often prefer a simple dedicated emergency generator separate from the larger standby generators. With increasing power densities and the desire for improved energy efficiency, we are seeing increased use of medium-voltage (15 kilovolts) standby generators over 480 or even 415 volts.
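One reason medium voltage becomes attractive at higher power densities is that, for the same load, line current falls in proportion to voltage. The sketch below applies the standard three-phase relationship I = P / (√3 × V × pf) to the voltages Rener names. The 2-megawatt load and 0.8 power factor are assumptions chosen only for illustration, and 15 kilovolts is used as a nominal value for the medium-voltage class.

```python
import math


def full_load_amps(load_kw: float, line_voltage_v: float,
                   power_factor: float = 0.8) -> float:
    """Three-phase line current for a given real power, voltage and power factor."""
    return load_kw * 1000.0 / (math.sqrt(3) * line_voltage_v * power_factor)


LOAD_KW = 2000.0  # hypothetical generator load, not from the article

# Compare the low-voltage and medium-voltage options named in the text.
for volts in (415.0, 480.0, 15_000.0):
    amps = full_load_amps(LOAD_KW, volts)
    print(f"{volts:>8.0f} V: {amps:8.1f} A")
```

The lower current at medium voltage generally means smaller conductors and lower resistive losses, at the cost of medium-voltage switchgear and transformation closer to the load.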

CSE: What are some key differences in electrical, lighting and power systems you might incorporate in this kind of facility, compared to other projects?

Starr: Industrial- and science-type buildings also use overhead busway, but instead of feeder busway, plug-in busway is almost exclusively the product of choice in data centers. Plug-in busway allows the tap box to be changed without having to de-energize the entire bus. Use of static transfer switches (STSs) in data centers has declined because of the rise of dual-corded power supplies, but I still advocate for them, especially in “unmanned” sites. Rack power strips (also referred to as rack PDUs) and floor-standing PDUs are standard data center practice but are not used in most other building applications. I also seek to include ATS and UPS remote annunciator accessories because they are low-cost at bid and provide operations with another opportunity to monitor their critical loads.

Peterson: Our approach to data center power systems and equipment follows the tenets of energy efficiency and reliability. We work with clients and vendors to find the best lifetime value, then aim to find other efficiencies when replicating the design in a modular manner. Also, the typical lighting control approach has been to keep the data center floor dark to reduce energy use.

CSE: How does your team work with the architect, owner’s rep and other project team members so the electrical/power systems are flexible and sustainable?

Starr: In most cases I turn to maintenance procedures and equipment life cycle cost to help project stakeholders make informed decisions. Pro/con lists and choosing by advantages are helpful formats. For example, 15 minutes of battery creates an expensive refresh cycle if the user is only bridging until a standby generator closes on the bus. During design meetings, many subjects surface that were not the original intent of the meeting. Table those conversations and group like subjects for future coordination meetings.

We find this approach better structures the frequency of design meetings and avoids meeting without an agenda. Note that architects and owner representatives are focused on a broader project picture than the individual disciplines designing systems. Advocate for ear plug stations; lock-out/tag-out boxes; system one-line and control drawings printed in large format and posted in their equipment rooms; and lettering the data center to define the whitespace grid (TIA-942 Annex B).

Rener: Typically, mechanical and electrical engineers are in the “driver’s seat” on data center design. The key with data centers or any mission critical facility is understanding power and cooling demands now and in the future. That means working with the architect and owner on early space planning, adjacencies, ceiling heights and MEP equipment move-in and move-out paths. The building core structure/envelope is often relatively inexpensive compared to MEP systems.

Peterson: By leading with a more scalable, efficient design our engineering teams can define the phases that the data center might have as it evolves throughout its life. The electrical equipment and components can be outfitted with a lower capacity at the beginning, which might accommodate today’s needs, yet still be designed to have flexibility for interspersed higher loads as well as future needs for increased capacity.

CSE: “Resilient” or “resiliency” is a buzzword when discussing data centers. What are owners requesting to make the building meet resiliency goals? How are you designing data centers to be more resilient?

Rener: Resiliency is a very large catch-all term for many of our clients these days, not just mission critical clients. Previously, mission critical design focused on terms like uptime, downtime, reliability and redundancy of systems. An example of this would be having N+1 (primary and backup) cooling towers. Resiliency would take this to the next level by asking, “How are current and future local microclimate patterns going to affect the operation of those cooling towers?” A similar issue arises around flood plains. While many data centers look to be located or designed for 500-year events, resiliency might look at whether 500-year events might be moving to 200-year events in some locations due to changes in climate.
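The practical difference between a 500-year and a 200-year event is easier to see as the chance of experiencing at least one such event during a facility's life. The sketch below uses the standard relationship 1 − (1 − 1/T)^n, which assumes each year is independent and the annual probability stays constant. The 30-year horizon and the function name are assumptions for illustration only.

```python
def prob_at_least_one_event(return_period_years: float,
                            horizon_years: int) -> float:
    """Chance of one or more events with the given return period over the horizon.

    Assumes independent years with a constant annual probability of 1/T.
    """
    annual_probability = 1.0 / return_period_years
    return 1.0 - (1.0 - annual_probability) ** horizon_years


HORIZON_YEARS = 30  # hypothetical facility life, not from the article

for return_period in (500, 200):
    p = prob_at_least_one_event(return_period, HORIZON_YEARS)
    print(f"{return_period}-year event over {HORIZON_YEARS} years: {p:.1%}")
```

Under these assumptions, the shift from a 500-year to a 200-year event more than doubles the lifetime exposure, which is the kind of question a resiliency review is meant to raise.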

Williams: To ensure data centers’ resilience, multiple backup systems must be present to prevent service disruption. These include redundant power supplies (renewable energy systems, energy storage facilities, local power generators, etc.); redundant server and network systems; redundant HVAC or cold storage systems such as chilled water or phase change storage; a robust control or automation system; real-time data center monitoring; diagnostic, fault detection and prevention systems; and, most importantly, a highly reliable security system. Automated data centers should take advantage of firewalls and cybersecurity systems that reduce the risk of cyberattacks and malware that could paralyze data centers’ control and power systems, steal confidential information, cause data loss or damage processors.

Peterson: The demand for built-in resiliency has increased steadily over the last decade, and data center clients continue to demand the most robust and reliable systems. We are able to meet clients’ needs to support the data center during extreme events and provide connectivity to more diverse power sources through microgrid design. As control systems improve, a microgrid system can help anticipate events and optimize performance, with the result that the data center won’t go down during an event, short or prolonged.

Starr: Most owner requests are centered around arc flash concerns, such that installing infrared windows is becoming standard practice. Lithium-ion batteries and modular-like designs are also becoming the new norm. Eaton’s Blackout Tracker is a great tool for discussing similar topics. The metrics (such as outages caused by humans) help users gauge what is best for their data center. AEI design projects go many steps further to help the owner see the value of:

- Enhanced acceptance testing.

- Infrared sensors at electrical terminations (thermal monitoring). At the end of 2018, my research found the cost for the original equipment manufacturer to integrate them to be about $250/point ($1,500/fixed breaker or $3,000/rack-able breaker).

- Depending on the geographic location, including not just heaters and thermostats in equipment but humidistats to control the heater depending on humidity.

- Requiring the contractor to use torque seal (for example, Dykem’s Cross-Check) on critical feeders.

- Considering products like LumaWatt Pro (integral infrared device integrated into light fixtures). This product has an optional Enlighted Space Application (subscription-based) that may be beneficial for security and provides CFD-like information in a trend-able format for the life of the data center.
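The thermal monitoring figures quoted in the list above lend themselves to a quick budget check. The sketch below uses the $250/point cost from the discussion. The points-per-breaker counts (6 and 12) are inferred by dividing the quoted per-breaker costs by the per-point cost, and the breaker quantities are hypothetical.

```python
COST_PER_POINT_USD = 250  # 2018 figure quoted in the discussion

# Inferred from the quoted totals: $1,500 / $250 = 6 and $3,000 / $250 = 12.
POINTS_PER_BREAKER = {"fixed": 6, "rackable": 12}


def monitoring_cost_usd(fixed_breakers: int, rackable_breakers: int) -> int:
    """Estimated OEM integration cost for infrared sensors at terminations."""
    points = (fixed_breakers * POINTS_PER_BREAKER["fixed"]
              + rackable_breakers * POINTS_PER_BREAKER["rackable"])
    return points * COST_PER_POINT_USD


# Hypothetical lineup: 10 fixed breakers and 4 rack-able breakers.
print(f"${monitoring_cost_usd(10, 4):,}")
```

A rough figure like this can be set against the cost of an unplanned outage when discussing the option with the owner.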

CSE: When designing lighting systems for these types of structures, what design factors are being requested? Are there any particular technical advantages that are or need to be considered?

Starr: In my experience, not much design time is spent deciding light levels or negotiating maintenance factors for data center spaces. Experienced lighting designers seek to achieve an average of 50 footcandles at the floor to aid in detailed tasks such as terminating fiber. The more prominent lighting subject in data centers, beyond feature lighting, is coordination of the hot or cold aisles. BICSI and TIA-942 note a minimum rear rack clearance of between 2 and 3.28 feet. Having a clearance of less than 3 feet may require rear doors on equipment racks to be a split configuration.

Note that while 3 feet sounds like enough space, it depends on the data center approach. Consider cable tray, lighting, containment, rack chimneys, overhead busway and rack power strips trying to plug into their receptacles overhead or under the floor. The lighting needs to be prioritized. To ensure this, have a coordination session with all disciplines.