Read this expert Q&A for tips on designing data centers, including current trends and emerging technologies.

Data center insights

- The data center industry is rapidly changing, requiring engineers to be up-to-date with standards and best practices.

- Current data center trends include alternative power strategies, growing AI demand and sustainability initiatives.

Respondents:

- Chris Barth, PE, Senior Mechanical Engineer, HDR, Inc., Phoenix

- Jarron Gass, PE, CFPS, Fire Protection Discipline Leader, CDM Smith, Pittsburgh

- William Kosik, PE, CEM, LEED AP, Lead Senior Mechanical Engineer, kW Mission Critical Engineering, Chicago

- Corey Wallace, PE, NICET IV, Principal Engineer – Fire Protection, Southland Industries, Las Vegas

What are some current trends in data centers?

Chris Barth: A primary challenge for many data center projects is power availability from utility providers. Owners and operators are implementing alternative power strategies, such as on-site prime power generation and cost-sharing with utilities. Site selection criteria have also evolved to focus more on utility power availability and less on network infrastructure.

Jarron Gass: Efficiency is currently the main driver behind construction and usage trends in data centers. The less space a server occupies, the less volume is needed and, consequently, the less there is to cool. Reducing the amount of energy required (both electrical and cooling) lessens the strain on the electrical grid. This, in turn, enables us to pack more computing power into a smaller footprint. With a growing demand for artificial intelligence (AI) and other high-performance computing, it’s essential not only to pursue efficiency but also to emphasize scalability and repeatability. Implementing modular designs allows for quicker initial builds and easier future expansions as demand for data centers rises.

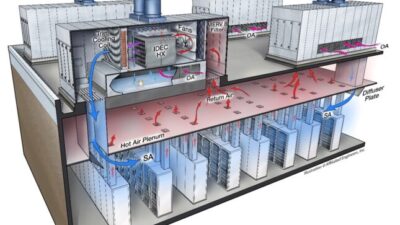

William Kosik: The data center design and construction industry continually finds new and innovative ways to power and cool data centers. Currently, one of the biggest issues I've seen is helping our clients determine the type of infrastructure they need to meet their prospective tenants' requirements. Large hyperscale facilities offer data halls ranging from 12 to 18 megawatts, and with certain tenants wanting to lease that space, it is always a challenge to determine how the main power and cooling infrastructure will be designed. Prospective tenants have strictly defined temperature, moisture, redundancy and continuous cooling requirements. The liquid-cooled cabinet has become one of the highest-priority systems to design, often creating significant challenges for data center facilities whose designs are already complete. Within the data environment, high-performance computing is driving up average server rack power densities. Traditional all-air methods of cooling data halls are increasingly being supplemented with direct-to-chip liquid cooling and/or hybrid liquid/air cooling to meet the elevated heat rejection demands of the densified equipment.
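To put numbers on those elevated heat rejection demands, the sensible heat balance Q = m_dot × cp × ΔT links a rack's heat load to the coolant flow a liquid loop must deliver. The sketch below is a minimal illustration, assuming a water-like coolant (cp ≈ 4.186 kJ/kg·K, density ≈ 1 kg/L) and hypothetical rack loads and temperature rise; glycol mixtures and actual CDU selections will differ.

```python
# Sensible heat balance for a liquid cooling loop: Q = m_dot * cp * dT.
# Assumed (not from the article): water-like coolant properties below.
CP_KJ_PER_KG_K = 4.186   # specific heat of water, kJ/(kg*K)
DENSITY_KG_PER_L = 1.0   # approximate density of water, kg/L


def required_flow_lpm(heat_load_kw: float, delta_t_k: float,
                      cp: float = CP_KJ_PER_KG_K,
                      density: float = DENSITY_KG_PER_L) -> float:
    """Coolant flow (L/min) needed to remove heat_load_kw with a delta_t_k temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_load_kw / (cp * delta_t_k)  # kW / (kJ/(kg*K) * K) = kg/s
    return mass_flow_kg_s / density * 60.0            # kg/s -> L/s -> L/min


if __name__ == "__main__":
    # Hypothetical rack loads at an assumed 10 K supply/return temperature rise.
    for rack_kw in (10, 50, 100):
        print(f"{rack_kw:>3} kW rack: {required_flow_lpm(rack_kw, 10):6.1f} L/min")
```

Under these assumptions, a 100 kW rack at a 10 K rise needs roughly 143 L/min, ten times the flow of a 10 kW rack, which is one reason liquid cooling reshapes piping and pumping design.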

What future trends (one to three years) should an engineer or designer expect for such projects?

Chris Barth: Rack power densities will continue to increase as computing demand grows globally. High-density data environments can significantly affect facility planning, power demand and distribution, cooling demand and structural floor loading. Designers and engineers will need to recognize where traditional design approaches may be inadequate and adapt them to support the evolving demands of densified racks.
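A simple division shows why densification changes facility planning: the same hall power is concentrated in far fewer rack positions. This sketch assumes, hypothetically, that a 12 MW data hall (the low end of the range cited earlier) is entirely available as IT load with no derating, and uses illustrative rack densities.

```python
import math


def racks_supported(it_load_mw: float, rack_density_kw: float) -> int:
    """Whole racks a hall's IT load can feed at a uniform rack density (no derating assumed)."""
    if rack_density_kw <= 0:
        raise ValueError("rack density must be positive")
    return math.floor(it_load_mw * 1000.0 / rack_density_kw)


if __name__ == "__main__":
    HALL_MW = 12.0  # low end of the 12-18 MW data hall range; treated as all IT load
    for density_kw in (10, 40, 120):  # hypothetical rack densities
        print(f"{density_kw:>3} kW/rack -> {racks_supported(HALL_MW, density_kw)} racks")
```

Each remaining rack position then carries more power and heat (and often more weight), which is where the distribution, cooling and floor-loading impacts come from.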

Jarron Gass: It can be difficult to distinguish between current trends and those just over the horizon, but efficiency, scalability and sustainability appear to be the key drivers of near-term decision-making. Where there’s a clear return on investment, green energy will continue to expand, supported by the growing use and capacity of battery storage solutions. Efficiency will come from reducing the physical footprint and lowering energy consumption per unit of output, leveraging refined and evolving technologies. Finally, there’s a strong push for scalability that supports repeatability across a range of sizes.

William Kosik: Depending on the building type, the window to consider new and innovative design methodologies may be a minimum of three to five years. For data centers, however, we may look at a horizon of less than one year. Not only are tenant requirements being revised and improved, but the marketplace for cooling equipment made specifically for data centers is also changing rapidly. Liquid cooling is one example.

How is the growth of cloud-based storage and virtualization impacting co-location projects?

Chris Barth: Speed to market has become critical for co-location operators and their tenants. The availability of data center space is directly tied to a tenant’s ability to support the rapid growth in cloud-based solutions. In addition to ground-up construction, owners are exploring renovating legacy data centers or converting non-data environments to activate servers as fast as possible. Modular and prefabricated construction has helped accelerate new-build facility construction timelines. Major data center clients often have national account agreements with suppliers of large equipment, such as generators. They pre-order these items in bulk and supply the equipment to the contractors for projects, bypassing the traditional contractor-purchase process.

Jarron Gass: The growth of cloud storage and virtualization is fueling increased demand for co-location services, particularly as businesses adopt hybrid cloud strategies. Companies are seeking secure, scalable environments with direct cloud access to ensure seamless connectivity. As high-density workloads become more common, advanced cooling solutions—such as liquid cooling—are increasingly necessary. Edge data centers are also expanding to support low-latency applications like AI, Internet of Things and 5G. Sustainability remains a key focus, with co-location providers integrating renewable energy sources and AI-driven power management systems. Rather than being replaced by cloud services, co-location is evolving into a critical hub for hybrid cloud, edge computing and AI-powered infrastructure.

Corey Wallace: Designs have to be more flexible and robust to accommodate the unique needs of potential co-location tenants.

What types of challenges do you encounter for these types of projects that you might not face on other types of structures?

Chris Barth: Where many facility types have a well-defined program and requirements, the needs of a data center facility are likely to change over the course of design and construction. Successful design and construction teams are agile and adaptable to changing project requirements.

Jarron Gass: Co-location services come with unique challenges, including multi-tenant security risks, limited customization options and potential resource constraints. Unlike private data centers, tenants in co-location facilities share power, cooling and network infrastructure, which can lead to latency issues and raise compliance concerns. Costs may also be less predictable, with possible hidden fees for bandwidth usage, remote hands services and infrastructure upgrades — such as added redundancy. Additionally, businesses must rely on the provider’s security protocols and operational reliability, which may not always be fully transparent, making service disruptions a real concern. While co-location offers scalability and potential cost savings, organizations need to carefully evaluate vendor reliability, implement robust security controls and design effective interconnection strategies to ensure performance and uptime.

William Kosik: One of the highest-priority systems is liquid cooling for a client's or tenant's computers. Liquid cooling systems have a big impact on the data center cooling infrastructure. Not only is the heat dissipation from liquid-cooled cabinets extreme, but tenant requirements for liquid cooling systems are also in a state of flux. One example is cooling distribution unit (CDU) redundancy and how many cabinets each CDU serves. Another challenge is dealing with a transient failure of the chiller or chilled water system, which requires very close analysis based on the tenant's requirements. For some designs and facilities, a continuous cooling system may not be needed. For other facilities and densities, however, a continuous cooling system comprising large on-site storage tanks is needed, along with an uninterruptible power supply to the central processing unit and additional piping distribution.
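Two of the questions raised here reduce to quick arithmetic. The sketch below assumes a hypothetical N+1 scheme for CDUs (enough units to carry the load, plus one spare) and sizes a chilled-water storage tank from its sensible capacity, load × time = m × cp × ΔT, to ride through a transient chiller outage. The load, CDU capacity, ride-through time and usable ΔT are all illustrative, and the tank estimate ignores stratification losses and unusable volume.

```python
import math

CP_KJ_PER_KG_K = 4.186       # water specific heat, kJ/(kg*K)
DENSITY_KG_PER_M3 = 1000.0   # water density, kg/m^3


def cdus_required(load_kw: float, cdu_capacity_kw: float, spares: int = 1) -> int:
    """N + spares CDUs: N units carry the load, spares cover a unit failure (assumed N+1 default)."""
    n = math.ceil(load_kw / cdu_capacity_kw)
    return n + spares


def storage_volume_m3(load_kw: float, ride_through_min: float, delta_t_k: float) -> float:
    """Chilled-water volume whose sensible capacity carries load_kw for ride_through_min.

    Ignores stratification, mixing and unusable tank volume, so real tanks are larger.
    """
    energy_kj = load_kw * ride_through_min * 60.0        # kW * s = kJ
    mass_kg = energy_kj / (CP_KJ_PER_KG_K * delta_t_k)   # kJ / (kJ/(kg*K) * K) = kg
    return mass_kg / DENSITY_KG_PER_M3


if __name__ == "__main__":
    # Hypothetical inputs: 1 MW of liquid-cooled load on 250 kW CDUs,
    # and a 12 MW hall riding through a 5-minute chiller restart with a 6 K usable rise.
    print("CDUs (N+1):", cdus_required(1000, 250))
    print(f"Storage: {storage_volume_m3(12000, 5, 6):.0f} m^3")
```

With these assumed inputs, the tank works out to roughly 143 m³ of water; because the relationship is linear, doubling the ride-through time doubles the volume.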

Corey Wallace: The presence of lithium-ion batteries can result in larger sprinkler system piping, a larger electric fire pump and increased power demand to serve that larger pump. A diesel fire pump selection must account for a larger footprint as well as ventilation and exhaust discharge. Concrete tee structures can restrict where sprinkler piping can be supported and limit routing options. Cable tray and support steel below ceilings can obstruct the sprinkler spray pattern if located within 18 inches of the sprinkler deflector. Additional sprinklers may be required to compensate for these obstructions, which in turn can require larger pipes and potentially a larger fire pump.
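The chain from obstructions to a larger fire pump follows from the sprinkler discharge relationship Q = K√P (Q in gpm, P in psi, K the sprinkler's K-factor). The sketch below is deliberately crude: it assumes every flowing sprinkler sees the same pressure and adds an optional hose allowance, whereas an actual hydraulic calculation accounts for friction loss, elevation and the remote design area. The K-factor, pressure and sprinkler counts are hypothetical.

```python
import math


def sprinkler_flow_gpm(k_factor: float, pressure_psi: float) -> float:
    """Discharge from one sprinkler: Q = K * sqrt(P)."""
    return k_factor * math.sqrt(pressure_psi)


def rough_demand_gpm(num_sprinklers: int, k_factor: float, pressure_psi: float,
                     hose_allowance_gpm: float = 0.0) -> float:
    """Crude system demand: all sprinklers at one pressure plus a hose allowance.

    Real hydraulic calculations vary pressure by location and add friction and elevation losses.
    """
    return num_sprinklers * sprinkler_flow_gpm(k_factor, pressure_psi) + hose_allowance_gpm


if __name__ == "__main__":
    K, P = 5.6, 7.0  # hypothetical K-factor and operating pressure
    for count in (20, 26):  # e.g., six extra sprinklers added under obstructions
        print(f"{count} sprinklers: {rough_demand_gpm(count, K, P):.0f} gpm")
```

Even in this simplified form, each added sprinkler contributes roughly 15 gpm at the assumed values, and that extra flow has to be carried by the piping and supplied by the pump.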

What are professionals doing to ensure such projects (both new and existing structures) meet challenges associated with emerging technologies?

Chris Barth: Facilities and campuses that have flexibility and scalability as core design criteria will be best suited to adapt to emerging technologies. Predicting adoption cycles of future trends and technology in industries that move as fast as the data center industry is extremely difficult. However, designers, owners, and construction professionals can safely assume that the computing technology within these facilities will be replaced several times during the building’s lifecycle. Providing adaptable building programs and systems sets building owners up for success in this rapidly changing landscape.

Jarron Gass: In an industry where speed is critical, design professionals are upgrading and building data centers to integrate as much new technology as possible, often focusing more on efficiency and scalability than strictly on cost-effectiveness. Being the first to go live offers a competitive advantage, enabling early revenue generation that can fund continued expansion. This urgency is fueling the push for scalable and modular design approaches, which streamline both engineering and implementation. Even when navigating the complexities of varying state building and fire codes, or site-specific challenges like seismic or flood zones, modularity allows teams to adapt quickly while maintaining momentum.

Corey Wallace: Fire protection engineers must be proactive and ask the end user questions. Systems must be designed with excess capacity to provide reasonable flexibility.

In what ways are you working with information technology (IT) experts to meet the needs and goals of a data center?

Chris Barth: IT professionals are essential for the success of any data center project. Their expertise and understanding of a facility's underlying networks and how to secure them are crucial. IT professionals also often establish the fiber distribution design for data centers, one of the most critical aspects of facility infrastructure. IT networks for AI data centers are far more robust than those of traditional cloud computing data centers. The direct and indirect infrastructure required to support these networks can have a significant impact on building elements and systems.

Jarron Gass: IT experts who are deeply immersed in emerging data center trends and technologies provide valuable insight into floor plan layouts, which helps optimize equipment placement and ensures efficient and, where necessary, redundant connectivity. These professionals serve as a central hub of information throughout the design and integration process, helping to ensure both feasibility and constructability. A skilled IT expert collaborates closely with project or technical managers to streamline design and construction efforts, ultimately contributing to a more coordinated and successful project delivery.

Describe a co-location facility project. What were its unique demands, and how did you achieve them?

Corey Wallace: I worked on a two-story, multi-phase data center of approximately 249,000 square feet. Its three distinct phased building structures were 71,144, 62,460 and 115,396 square feet. The phasing created many challenges. The initial fire pump was designed and selected based on the needs of the first phase. The phase two expansion used a different stationary storage battery system and therefore required a larger fire pump to meet the anticipated fire demand. Because of the phase one fire pump's location, more piping had to be routed through a very congested utility corridor. The data halls also used cable trays and support steel below the ceilings. In the end, a new, larger fire pump was added to the building to accommodate the larger flow as well as future battery system options. The data hall cable tray and support steel were lowered to more than 18 inches below the sprinkler deflectors, and the below-ceiling cable trays were kept less than 48 inches wide.
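The two clearances used on this project can be expressed as a screening check over a cable tray schedule. This is a minimal sketch using only the thresholds stated above (more than 18 inches below the deflector, less than 48 inches wide); it is not a substitute for the full obstruction rules in NFPA 13, and the tray tags and dimensions are hypothetical.

```python
from dataclasses import dataclass

# Thresholds as stated for this project; a screening aid, not the full NFPA 13 obstruction rules.
MIN_CLEARANCE_IN = 18.0   # tray/steel must sit MORE than this far below the deflector
MAX_TRAY_WIDTH_IN = 48.0  # trays kept LESS than this wide (assumed link: wider obstructions
                          # generally need sprinklers beneath them; verify in the adopted code)


@dataclass
class CableTray:
    tag: str                        # hypothetical tray identifier
    depth_below_deflector_in: float
    width_in: float


def screen_tray(tray: CableTray) -> list[str]:
    """Return human-readable issues; an empty list means the tray passes both checks."""
    issues = []
    if tray.depth_below_deflector_in <= MIN_CLEARANCE_IN:
        issues.append(f"{tray.tag}: only {tray.depth_below_deflector_in:g} in below deflector")
    if tray.width_in >= MAX_TRAY_WIDTH_IN:
        issues.append(f"{tray.tag}: {tray.width_in:g} in wide")
    return issues


if __name__ == "__main__":
    schedule = [CableTray("T-1", 12, 24), CableTray("T-2", 20, 36), CableTray("T-3", 24, 60)]
    for tray in schedule:
        print(screen_tray(tray) or f"{tray.tag}: OK")
```

Running a check like this against the coordinated model early makes it easier to lower or narrow trays before steel is fabricated, rather than adding sprinklers afterward.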

How are engineers designing these kinds of projects to keep costs down while offering appealing features, complying with relevant codes and meeting client needs?

Chris Barth: Working with experienced construction teams and cost estimators throughout design is an important part of maintaining constrained project budgets. In a landscape of variable lead times, material and labor costs, and emerging technology considerations, early involvement with trade partners can help identify opportunities for savings without sacrificing facility functionality. Prefabrication, offsite pre-assembly and modular equipment assemblies are ways teams can deliver cost-effective designs and installations.

William Kosik: The clients I personally work with are primarily data center developers, who are most interested in building large data center facilities to be leased to different companies. While all of the design and analysis work is for the developer, it is mainly done for the tenants who might move in. So there is friction between providing systems that exceed the requirements of current tenants, who have to pay up front, and keeping the system design and construction to what meets the tenant's minimum requirements. In both cases, the data halls will undergo some revisions depending on the tenant. The trick is to understand the client's needs ahead of time so the facility is neither overdesigned nor underdesigned. Even so, this process often results in modifications to the building while it is under construction.

Jarron Gass: The most cost-effective options and greatest savings come from repeating data center designs across multiple sites. Developing prototype designs, whether for specific components or entire facilities, enables faster deployment with minimal effort, especially for vertical construction. This approach supports rapid design adaptation through relatively simple modifications, such as mirroring or reconfiguring layouts to suit the unique geography of a given site.

Corey Wallace: Fire suppression and fire alarm are often treated as deferred submissions. Bringing these contractors into the early design process alongside the other mechanical, electrical and plumbing disciplines allows collaboration and reduces rework. Early input enables more effective space planning and programming, which can reduce non-value-added costs such as poorly located fire riser rooms and ceiling obstructions.

How are you preparing for future phases of data center operation? How do you specifically address this challenge when the first phase is still operational?

Chris Barth: Phasing is a critical aspect of many data center and campus designs. Understanding phasing requirements early in the design process is the first step toward seamless operability throughout phased construction. Construction activities can carry a risk of disrupting service to existing data environments. To avoid disruptions, systems must be arranged so that active phases are reliably isolated from the construction and commissioning of later phases. Temporary power and cooling may be required for some parts of the facility as a bridging solution while later phases are completed.

Careful consideration of construction activities, computing and support equipment, move-in pathways and facility maintenance must be incorporated into the design of early phases. If planning does not make provisions for construction to continue on site for months or years, early-phase operations can easily be disrupted.

Corey Wallace: I ask a lot of probing questions to understand future needs and try to anticipate future site conditions. I request a description of the desired vision for the future spaces and provide a detailed description of risks, potential impacts to operations and challenges that cannot be resolved. Some risks cannot be eliminated, but clearly explaining why and developing a plan to mitigate their impact reduces future frustration for the owner and/or operator.