Data Centers

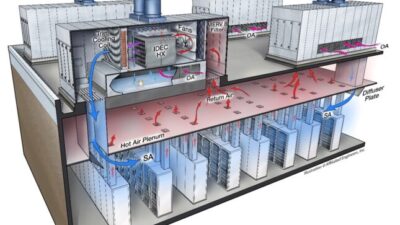

A data center is a physical facility that houses the infrastructure needed for the storage, processing and networking of data. It is typically designed to run a large number of computers and servers, along with the storage systems that hold databases and file systems. To operate continuously and reliably, a data center is usually equipped with redundant power supplies, backup generators and cooling systems. Most also have multiple layers of physical and digital security to protect against unauthorized access and data breaches. Businesses, government agencies and other organizations use data centers to manage large amounts of data. Facilities range in size from small server rooms to large, purpose-built buildings with multiple floors and thousands of servers.