In a data center, the efficiencies of the cooling, power, and IT systems should be analyzed as a complete system so the true efficiency potential becomes apparent.

Since 2005, the data center market has matured significantly in its understanding of what drives energy efficiency. Several private organizations in the United States and Canada (ASHRAE, The Green Grid, Green Globes) and worldwide (CIBSE, Japan's CASBEE, Australia's Green Star) have developed robust standards and criteria aimed at making buildings and data centers more energy- and water-efficient. These standards and criteria provide a sound decision-making framework for both new data center designs and retrofit projects. Their release is timely, as local, state, and federal energy-efficiency guidelines and programs are beginning to proliferate.

As an example, Executive Order 13514, Federal Leadership in Environmental, Energy, and Economic Performance, signed by President Obama on Oct. 5, 2009, mandates reductions in energy consumption, water use, and greenhouse gas emissions in U.S. federal facilities. Although it also sets reduction requirements for areas other than buildings (vehicles, electricity generation, etc.), most of the order is geared toward the built environment. For data centers specifically, and for the environmental impact of technology use, the order includes a dedicated section on electronics and data processing facilities. An excerpt from this section states, “[Agencies should] promote electronics stewardship, in particular by implementing best management practices for energy-efficient management of servers and federal data centers.”

Although the Executive Order is written specifically for U.S. federal agencies, the broader data center industry is also entering the next era of energy and resource efficiency: strongly encouraged or compulsory reductions in resource use and greenhouse gas emissions. The U.S. Environmental Protection Agency's new Energy Star Data Center Energy Efficiency Initiative is an example of a program that will quickly gain momentum in the private sector by demonstrating business value, as the other Energy Star programs have. The program is designed to raise the energy-efficiency bar as the overall stock of data centers improves. Only facilities in the top 25% can earn an Energy Star rating, so as more facilities improve their energy performance, the threshold for the top 25% rises and the rating is reserved for the best of the best.
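The moving threshold can be illustrated with a short Python sketch. It assumes facilities are ranked on PUE alone, which is a simplification. The actual Energy Star score is computed by EPA's own model. The peer-group values and the helper `top_quartile_cutoff` are hypothetical.

```python
from statistics import quantiles
from typing import Sequence


def top_quartile_cutoff(pues: Sequence[float]) -> float:
    """PUE at or below which a facility falls in the best 25% of its peers.

    Hypothetical helper: ranks on PUE only (lower is better), a
    simplification of how EPA actually scores data centers.
    """
    # quantiles(..., n=4) returns the three quartile boundaries; the first
    # one (25th percentile) separates the lowest-PUE quarter of the group.
    return quantiles(pues, n=4)[0]


# Hypothetical peer groups: eight facilities before and after efficiency
# upgrades spread through the market.
peers_today = [1.35, 1.50, 1.60, 1.75, 1.90, 2.00, 2.20, 2.50]
peers_later = [1.20, 1.30, 1.40, 1.45, 1.55, 1.60, 1.70, 1.90]

print(f"cutoff today: {top_quartile_cutoff(peers_today):.3f}")
print(f"cutoff later: {top_quartile_cutoff(peers_later):.3f}")
```

For these sample values, the cutoff for the best quarter drops from about 1.525 to about 1.325. A facility that qualified before may therefore no longer qualify, even if its own PUE is unchanged.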

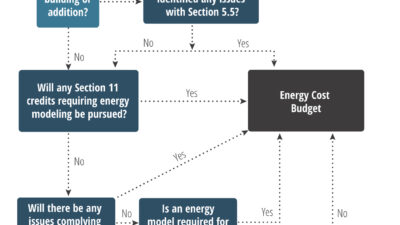

Another highly anticipated program that will help the data center design and construction industry achieve energy and water efficiency is coming from the U.S. Green Building Council. LEED Data Centers is available for a second public comment period through July 2011. It is based on the current LEED standards, Green Building Design and Construction and Green Building Operations and Maintenance, but modifies their credits to tailor it specifically to data center facilities. The credits in the new standard focus strongly on energy efficiency, reduction of cooling tower water use, measurement and verification of power and cooling systems, and commissioning. Once the program is released for public use, achieving LEED certification will require following a rigorous and thorough process.

The Executive Order, Energy Star for Data Centers, and LEED Data Centers are just a few examples of programs shaped to reduce energy use in data centers. Because the industry is continually (and quickly) raising the bar on energy efficiency, only strategies that go beyond the typical system-specific, incremental improvements will make a big impact.

Energy efficiency strategies

In a data center, the cooling, power, and IT systems would ordinarily each be designed as stand-alone systems to minimize energy use. However, the true efficiency potential becomes apparent only when the efficiencies of the three systems are analyzed together as a complete system. Developing accurate efficiency metrics for a data center requires a robust, methodical process that uses industry-recognized energy simulation algorithms. The result is solid, engineering-based values for annual energy use and power usage effectiveness (PUE, the ratio of total facility energy to IT equipment energy). The following items illustrate some of the more important aspects to consider during the planning and design of a data center:
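Once the simulation (or metering) has produced hourly results, calculating annual PUE is simple arithmetic. The minimal Python sketch below assumes hourly kWh series for IT load, cooling, and electrical losses. The function name and all input values are hypothetical.

```python
from typing import Sequence


def annual_pue(it_kwh: Sequence[float], cooling_kwh: Sequence[float],
               power_loss_kwh: Sequence[float]) -> float:
    """Annual PUE = total facility energy / IT equipment energy.

    Each argument holds one value per hour (kWh). Hypothetical helper.
    """
    if not len(it_kwh) == len(cooling_kwh) == len(power_loss_kwh):
        raise ValueError("hourly series must have equal length")
    it_total = sum(it_kwh)
    facility_total = it_total + sum(cooling_kwh) + sum(power_loss_kwh)
    return facility_total / it_total


# Hypothetical year: constant 1,000 kW IT load, cooling energy that is lower
# for half of each day (e.g., cooler hours with more economizer use), and a
# flat electrical distribution loss.
HOURS = 8760
it = [1000.0] * HOURS
cooling = [250.0 if h % 24 < 12 else 350.0 for h in range(HOURS)]
losses = [80.0] * HOURS

print(f"annual PUE: {annual_pue(it, cooling, losses):.2f}")
```

The sketch sums energy over the whole year rather than averaging hourly PUE values. This weights each hour by the energy it actually used.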

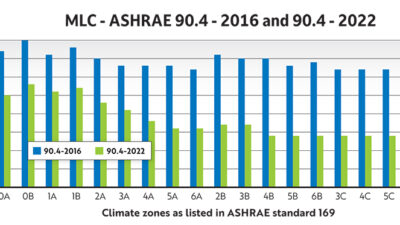

Understand and analyze the interdependency of climate and economizers. Climate affects energy use in any data center, and its impact becomes much larger once economizers are factored in. In this context, economizers include water-side, air-side, and evaporative types, in both direct and indirect configurations. Indirect systems use different types of heat transfer, such as fixed-plate heat exchangers, heat wheels, and evaporatively cooled heat exchangers. Each will perform differently in different geographical locations.
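One way to see how climate and economizer type interact is to sort hourly outdoor temperatures by the cooling mode they allow. The Python sketch below is a simplified model, not a substitute for the simulation described above. It assumes each economizer type can be represented by a fixed approach temperature, which is larger for indirect heat exchangers than for direct outdoor air. The 75 F supply, 95 F return, approach values, and weather sample are all hypothetical.

```python
from collections import Counter


def economizer_mode(t_out_f, supply_f, return_f, approach_f):
    """Classify one hour by the cooling it can get from outdoor air.

    approach_f is the temperature penalty of the heat-transfer path
    (about zero for direct outdoor air, larger for indirect exchangers).
    """
    t_delivered = t_out_f + approach_f
    if t_delivered <= supply_f:
        return "full"        # economizer alone meets the supply setpoint
    if t_delivered < return_f:
        return "partial"     # economizer precools; mechanical cooling trims
    return "mechanical"      # outdoor air cannot help this hour


def mode_hours(hourly_db_f, supply_f=75.0, return_f=95.0, approach_f=0.0):
    """Count hours in each mode; all default setpoints are hypothetical."""
    return Counter(economizer_mode(t, supply_f, return_f, approach_f)
                   for t in hourly_db_f)


# Hypothetical six-hour sample of outdoor dry-bulb temperatures (F).
sample = [55.0, 68.0, 74.0, 82.0, 90.0, 99.0]
print(mode_hours(sample))                  # direct air, 0 F approach
print(mode_hours(sample, approach_f=8.0))  # indirect exchanger, 8 F approach
```

Running the same weather file with different approach values and supply setpoints shows how one climate can produce very different economizer hours, depending on the heat-transfer method.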

Select cooling and power equipment with the lowest possible energy consumption, with particular focus on efficiency at part-load operating conditions. This applies to highly efficient compressorized cooling equipment, UPSs, and transformers. Understanding each unit's part-load efficiency, and how often the computers will run at part load, will then help in making the optimal equipment selection. To illustrate this, consider the following analysis of different UPS technologies and electrical system designs.

Each electrical system design has a different efficiency at each loading level. At a load level of 40%, the efficiencies range from 78% to 87%. At 80%, they range from 84% to 90%. Modular, scalable design is the best starting point for dealing with part-load efficiency. Capacity is added only as the load grows, which keeps the installed equipment loaded in its more efficient range (Figure 2).
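The annual cost of these part-load efficiency differences follows from the relation input power = output power / efficiency. The Python sketch below uses the endpoints of the efficiency ranges quoted above and assumes a constant, hypothetical 400 kW IT load all year. It also treats the modular system as "the same load at 80% loading," which is an additional simplifying assumption.

```python
HOURS_PER_YEAR = 8760


def annual_loss_kwh(output_kw: float, efficiency: float) -> float:
    """Energy lost upstream of the IT load in one year (input minus output)."""
    input_kw = output_kw / efficiency
    return (input_kw - output_kw) * HOURS_PER_YEAR


it_load_kw = 400.0  # hypothetical constant IT load
# Same load on a 1,000 kW system (40% loaded) vs. a modular system built out
# to 500 kW (80% loaded), at the low and high ends of the quoted ranges.
cases = {
    "1000 kW @ 40%, worst": annual_loss_kwh(it_load_kw, 0.78),
    "1000 kW @ 40%, best": annual_loss_kwh(it_load_kw, 0.87),
    "500 kW @ 80%, worst": annual_loss_kwh(it_load_kw, 0.84),
    "500 kW @ 80%, best": annual_loss_kwh(it_load_kw, 0.90),
}
for name, kwh in cases.items():
    print(f"{name}: {kwh:,.0f} kWh/yr")
```

At both ends of the ranges, the more heavily loaded modular case loses less energy over the year. This is the effect that right-sizing through modular design aims to capture.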

Do not work against the external environment; analyze climate data to determine how the climate can help lower annual energy use. This idea must be taken into account when selecting a site and determining the appropriate cooling system and economization techniques. A cool, dry climate will produce annual energy consumption very different from that of a hot, humid climate or a hot, dry one. Locations with a large difference between dry-bulb and wet-bulb temperatures (the wet-bulb depression) are best suited to evaporative cooling strategies. This is especially true in arid climates, where evaporative cooling can significantly reduce dry-bulb temperatures without using compressors. Even in climates with hot, humid summers, sensible energy recovery will still leave a high percentage of the year's hours free of mechanical cooling. This matters because data centers use much higher discharge air temperatures than facilities designed for standard comfort cooling. The higher temperatures extend the number of hours an energy recovery air-handling unit can be used.
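The value of a large wet-bulb depression can be shown with the usual saturation-effectiveness estimate for a direct evaporative cooler. The minimal Python sketch below uses a 0.85 effectiveness and two climate points that are assumptions for illustration, not design data.

```python
def evap_leaving_temp_f(t_db_f: float, t_wb_f: float,
                        effectiveness: float = 0.85) -> float:
    """Leaving dry-bulb of a direct evaporative cooler (F).

    t_leave = t_db - effectiveness * (t_db - t_wb); the default
    effectiveness is an assumed, typical-looking value.
    """
    if t_wb_f > t_db_f:
        raise ValueError("wet-bulb cannot exceed dry-bulb")
    depression = t_db_f - t_wb_f  # the wet-bulb depression
    return t_db_f - effectiveness * depression


# Same 95 F dry-bulb in two hypothetical climates.
arid = evap_leaving_temp_f(95.0, 65.0)   # 30 F wet-bulb depression
humid = evap_leaving_temp_f(95.0, 80.0)  # 15 F wet-bulb depression
print(f"arid: {arid:.1f} F, humid: {humid:.1f} F")
```

At the same 95 F dry-bulb, the arid case delivers air at about 69.5 F, compared with about 82 F in the humid case. The wet-bulb depression, not the dry-bulb temperature alone, determines where evaporative strategies pay off.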

In addition to optimizing airflow across the computer equipment, manage the internal heat gains of the power and cooling systems themselves. Fans in air-handling units use electricity, and they also emit heat into the airstream. High-efficiency fan motors not only use less electricity than standard-efficiency motors but also emit less heat into the airstream. DC motors and variable-speed motors also reduce energy use, but these savings must be weighed against the losses in the variable frequency drive and the AC-to-DC conversion equipment.
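The trade-offs described above can be quantified with the fan affinity laws. The Python sketch below makes several assumptions:

- Fan power follows the ideal cube law with respect to speed.
- The fan is a hypothetical 10 kW (shaft) unit.
- Motor and drive efficiencies are illustrative values.
- The motor sits in the airstream, so all of its input power becomes heat the cooling system must remove.
- The drive sits outside the airstream.
- The airflow requirement allows the fan to run at 70% speed.

```python
def fan_power_and_heat(full_speed_shaft_kw, speed_fraction, motor_eff,
                       drive_eff=1.0):
    """Return (metered electrical kW, heat added to the airstream in kW)."""
    shaft_kw = full_speed_shaft_kw * speed_fraction ** 3  # ideal affinity law
    motor_input_kw = shaft_kw / motor_eff
    electrical_kw = motor_input_kw / drive_eff  # drive losses seen at the meter
    # Motor in the airstream: shaft work and motor losses both end up as heat
    # in the air; drive losses are assumed to be rejected outside it.
    heat_to_air_kw = motor_input_kw
    return electrical_kw, heat_to_air_kw


# Hypothetical 10 kW (shaft) fan: constant speed with a standard motor vs.
# 70% speed with a high-efficiency motor on a 97%-efficient drive.
constant = fan_power_and_heat(10.0, 1.0, motor_eff=0.90)
variable = fan_power_and_heat(10.0, 0.7, motor_eff=0.93, drive_eff=0.97)
for label, (kw, heat) in (("constant", constant), ("variable", variable)):
    print(f"{label}: {kw:.2f} kW metered, {heat:.2f} kW heat to air")
```

In this example, running the fan at 70% speed cuts metered power from about 11.1 kW to about 3.8 kW, even after the drive loss. The heat added to the airstream falls along with it, which reduces the cooling load as well.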

Design for the highest allowable internal air temperature that will not cause the computers' internal fans to run at excessive speeds or increase electrical leakage in the computers. The ASHRAE supplement to Thermal Guidelines for Data Processing Environments (ASHRAE 2004), titled 2008 ASHRAE Environmental Guidelines for Datacom Equipment – Expanding the Recommended Environmental Envelope, recommends an upper dry-bulb limit of 80 F for the air used to cool the computers. Using this temperature (or an even higher one in the near future) increases the number of economizer hours. When vapor compression (mechanical) cooling is used, the elevated temperatures also lower compressor power. However, the servers' onboard fans will typically speed up and draw in more air to keep the internal components at an acceptable temperature. Also, depending on the internal heat sink specification, electrical leakage increases at elevated temperatures, eroding some of the energy savings. Unless the servers' internal thermal management algorithms are modified, energy returns will diminish once the data center supply air temperature exceeds 80 F, because the server fans require more power. The exception is a cooling system with an economizer that can provide air warmer than 80 F without any compressor power (Figure 3).
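The compressor-power side of this argument can be illustrated with the ideal (Carnot) cooling COP, T_evap / (T_cond - T_evap) in kelvin. This is an upper bound, not a chiller model. The Python sketch below assumes an evaporating temperature 15 F below the supply air and a fixed 105 F condensing temperature. Both values are illustrative.

```python
def f_to_k(t_f: float) -> float:
    """Convert degrees Fahrenheit to kelvin."""
    return (t_f - 32.0) * 5.0 / 9.0 + 273.15


def carnot_cop(t_evap_f: float, t_cond_f: float) -> float:
    """Ideal cooling COP between evaporating and condensing temperatures."""
    t_e, t_c = f_to_k(t_evap_f), f_to_k(t_cond_f)
    if t_c <= t_e:
        raise ValueError("condensing temperature must exceed evaporating")
    return t_e / (t_c - t_e)


# Assumed: evaporator runs 15 F below supply air; condenser fixed at 105 F.
for supply_f in (65.0, 72.0, 80.0):
    cop = carnot_cop(supply_f - 15.0, 105.0)
    print(f"supply {supply_f:.0f} F: ideal COP {cop:.1f}, "
          f"ideal compressor kW per kW of cooling {1 / cop:.3f}")
```

Under these assumptions, the ideal COP rises from about 9.3 at a 65 F supply to about 13.1 at 80 F. Real equipment falls well short of these values, but its efficiency improves in the same direction as supply temperature rises.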

Design for the widest allowable humidity band (lowest low and highest high) that still provides a safe operating environment for the computers, minimizing the risk of electrostatic discharge (ESD) and corrosion of internal components. Adding moisture to, or removing it from, the air in a data center uses energy. If the band between the recommended minimum and maximum humidity levels is wide enough, many climates will have outdoor moisture levels within tolerance for a significant number of hours. During those hours, no moisture needs to be added or removed. In more humid climates, dedicated dehumidification air-handling units can remove moisture more efficiently than individual cooling units located throughout the facility. Using desiccants to remove moisture, and using the heat exhausted from the computer equipment to regenerate them, can yield maximum energy savings. This approach eliminates mechanical cooling for dehumidification and reuses the computers' waste heat.

For adding moisture in very dry climates, adiabatic humidification equipment, such as evaporative or spray humidifiers, uses 20 to 200 times less energy than standard electric humidifiers. Beyond equipment selection, the design setpoints also affect annual water use. The current ASHRAE recommendations indicate a minimum moisture level of 41 grains per pound of dry air. Building performance simulation techniques can generate hourly energy and water use. A parametric analysis of different indoor moisture values, using these techniques, will yield the annual water use for each humidity setpoint option (Figure 4).
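A parametric study like this starts by expressing weather data in the same units as the setpoint. The minimal Python sketch below makes several assumptions:

- Saturation vapor pressure over water follows the Magnus approximation (0.6112 kPa, 17.62, 243.12 C).
- Air is at sea-level pressure.
- Outdoor air is used directly.
- The input is a hypothetical six-hour dew-point sample.

A real study would run all 8,760 hours through the simulation. The helper names are hypothetical.

```python
import math

GRAINS_PER_LB = 7000.0


def grains_per_lb(dew_point_f: float, pressure_kpa: float = 101.325) -> float:
    """Humidity ratio (grains of water per lb of dry air) at a dew point."""
    t_c = (dew_point_f - 32.0) * 5.0 / 9.0
    # Magnus approximation of saturation vapor pressure over water, kPa.
    p_w = 0.6112 * math.exp(17.62 * t_c / (243.12 + t_c))
    w = 0.621945 * p_w / (pressure_kpa - p_w)  # lb water per lb dry air
    return w * GRAINS_PER_LB


def humidification_hours(hourly_dew_points_f, min_grains=41.0):
    """Count hours whose outdoor moisture is below the chosen minimum."""
    return sum(1 for dp in hourly_dew_points_f
               if grains_per_lb(dp) < min_grains)


sample = [10.0, 25.0, 38.0, 45.0, 55.0, 62.0]  # hypothetical dew points, F
for setpoint in (41.0, 30.0):
    print(f"min {setpoint:.0f} gr/lb: "
          f"{humidification_hours(sample, setpoint)} h need humidification")
```

For this sample, lowering the minimum from 41 to 30 grains reduces the hours needing humidification from three to two. Across a full weather file, the same comparison approximates the setpoint trade-off that the simulation quantifies.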

In addition to electricity use, analyze annual and peak water consumption, and employ methods to reduce the water used by humidification and cooling tower makeup systems. A useful rule of thumb for estimating data center water use in the early planning stages is 0.50 gal per kWh of total electricity use. Consider a data center with a 1 MW IT load and a PUE of 1.50, running at full load for one year. It will consume about 13 million kWh (1,000 kW × 1.50 × 8,760 h) and, by the rule of thumb, about 6.5 million gal of water annually. Water can be saved by lowering the minimum humidity level, increasing the cooling tower's cycles of concentration (which reduces blowdown), and minimizing cooling tower drift. Quantifying these savings requires a detailed hour-by-hour simulation. Such measures can save 10% to 25% of total annual water consumption (Figure 5).
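The rule-of-thumb arithmetic above, and one of the cooling tower levers, can be checked in a few lines of Python. The makeup balance uses the standard relation makeup = evaporation × C / (C - 1), where C is the cycles of concentration and drift is lumped with blowdown as non-evaporative loss. The evaporation volume and cycles values are hypothetical, and the resulting savings apply to tower makeup only, not to total site water.

```python
HOURS_PER_YEAR = 8760


def rule_of_thumb_gallons(it_kw, pue, gal_per_kwh=0.50):
    """Early-planning estimate: total facility kWh x gallons per kWh."""
    total_kwh = it_kw * pue * HOURS_PER_YEAR
    return total_kwh * gal_per_kwh


def tower_makeup_gallons(evaporation_gal, cycles):
    """Makeup = evaporation + (blowdown + drift).

    With cycles of concentration C, blowdown + drift = evaporation / (C - 1).
    """
    if cycles <= 1.0:
        raise ValueError("cycles of concentration must exceed 1")
    return evaporation_gal + evaporation_gal / (cycles - 1.0)


print(f"{rule_of_thumb_gallons(1000.0, 1.50):,.0f} gal/yr")  # 1 MW IT, PUE 1.50

evap = 4_000_000.0  # hypothetical annual tower evaporation, gal
base = tower_makeup_gallons(evap, cycles=3.0)
improved = tower_makeup_gallons(evap, cycles=6.0)
print(f"tower makeup savings: {1 - improved / base:.0%}")
```

Raising the cycles of concentration from 3 to 6 cuts makeup by 20% in this example, because less water has to be bled off to control dissolved solids.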

When possible, choose a source of energy that has the lowest greenhouse gas (GHG) emissions, uses the least water, and has the highest energy conversion efficiency. Although this is not strictly related to the energy efficiency of the data center, it is important to identify the electricity provider in each location under consideration. Determine the provider's mix of renewable and fossil fuel sources used to generate electricity. This helps in understanding the indirect CO2 emissions associated with powering the data center. For example, examining the indirect CO2 emissions not only of the primary data center but also of the secondary sites within an IT enterprise gives a more complete picture of the enterprise's environmental impact. This analysis should account for the different CO2 emission rates of different electricity generating stations. Planned additions to (and reductions in) IT load at each facility should also be estimated to give a longer-term view of the indirect CO2 emissions (Figure 6).
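An enterprise-wide view of indirect emissions comes down to multiplying each site's annual electricity by the emission factor of its serving utility. The Python sketch below follows that approach. All site names, loads, PUEs, emission factors, and growth rates are hypothetical placeholders, not real utility data.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass
class Site:
    name: str
    it_kw: float
    pue: float
    kg_co2_per_kwh: float  # grid emission factor of the serving utility


def annual_tonnes_co2(site: Site, it_growth: float = 0.0) -> float:
    """Indirect CO2 from purchased electricity, in metric tons per year."""
    kwh = site.it_kw * (1.0 + it_growth) * site.pue * HOURS_PER_YEAR
    return kwh * site.kg_co2_per_kwh / 1000.0  # kg -> metric tons


sites = [
    Site("primary", it_kw=1000.0, pue=1.5, kg_co2_per_kwh=0.30),
    Site("secondary-a", it_kw=300.0, pue=1.9, kg_co2_per_kwh=0.70),
    Site("secondary-b", it_kw=150.0, pue=2.1, kg_co2_per_kwh=0.05),
]
today = sum(annual_tonnes_co2(s) for s in sites)
# Hypothetical plan: primary IT load grows 20%, secondary sites unchanged.
later = sum(annual_tonnes_co2(s, 0.20 if s.name == "primary" else 0.0)
            for s in sites)
print(f"today: {today:,.0f} t CO2/yr, after growth: {later:,.0f} t CO2/yr")
```

In this made-up portfolio, a smaller secondary site on a carbon-intensive grid emits nearly as much as the primary site. That kind of finding only appears when every site is included.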

What’s next?

As data centers are fine-tuned to use the least electricity and water possible, new metrics will probably emerge that focus on GHG emissions for IT applications (such as e-mail or payroll) and for the supply chain (all of the vendors and equipment used to develop a final product or service).

Why is this important? Power and cooling account for anywhere from 20% to 50% of the total energy required to run the IT operation, so this area will be targeted for greenhouse gas reductions. (The greenhouse gases associated with processes such as electricity generation, vehicular transportation, wastewater processing, and solid waste disposal are CO2, CH4, N2O, HFCs, PFCs, and SF6, typically reported in metric tons of CO2 equivalent.) Supply chain reporting is also a good way to gauge an enterprise's energy and environmental impact. Companies that use supply chain energy and GHG intensity to differentiate their products and services will be well positioned as GHG reporting becomes a widely used process. In the meantime, it will remain important to focus on an integrated process that allows a synergistic approach to reducing data center energy and water intensity.
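As a rough cross-check on the 20% to 50% range, assume that "total energy" means total facility energy and that all non-IT energy is power and cooling overhead. Under those assumptions, the overhead fraction f maps directly to PUE:

```latex
f = \frac{E_{\text{facility}} - E_{\text{IT}}}{E_{\text{facility}}}
  = 1 - \frac{1}{\mathrm{PUE}}
\quad\Longleftrightarrow\quad
\mathrm{PUE} = \frac{1}{1 - f}

f = 0.20 \;\Rightarrow\; \mathrm{PUE} = 1.25,
\qquad
f = 0.50 \;\Rightarrow\; \mathrm{PUE} = 2.0
```

The quoted range therefore corresponds roughly to facilities with PUEs between 1.25 and 2.0.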

Kosik is a principal data center energy technologist with HP’s Technology Services Strategy group. He is a member of the Consulting-Specifying Engineer editorial advisory board.